How to Choose an NVIDIA GPU for Deep Learning in 2023: Ada, Ampere, GeForce, NVIDIA RTX Compared - YouTube

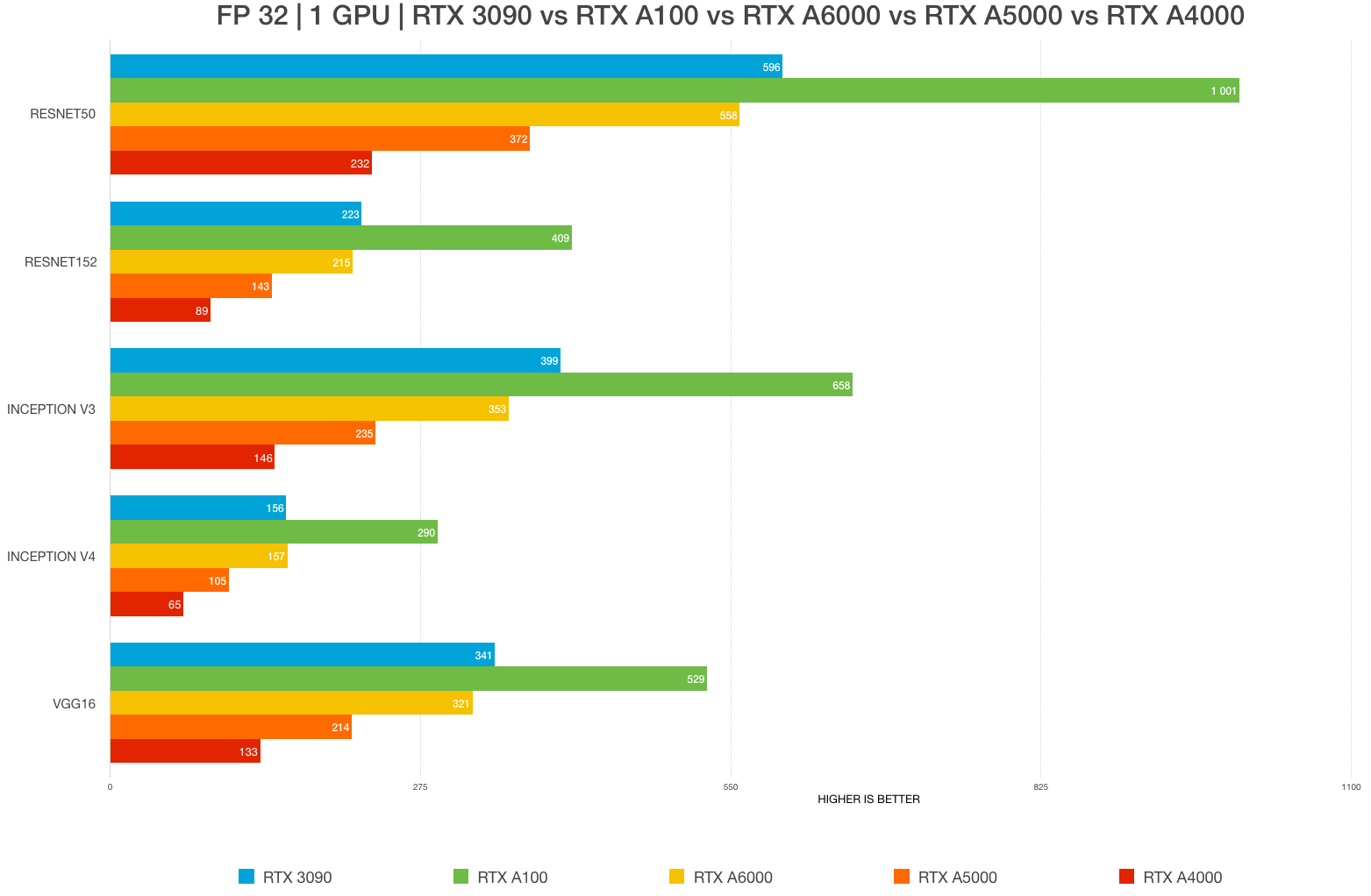

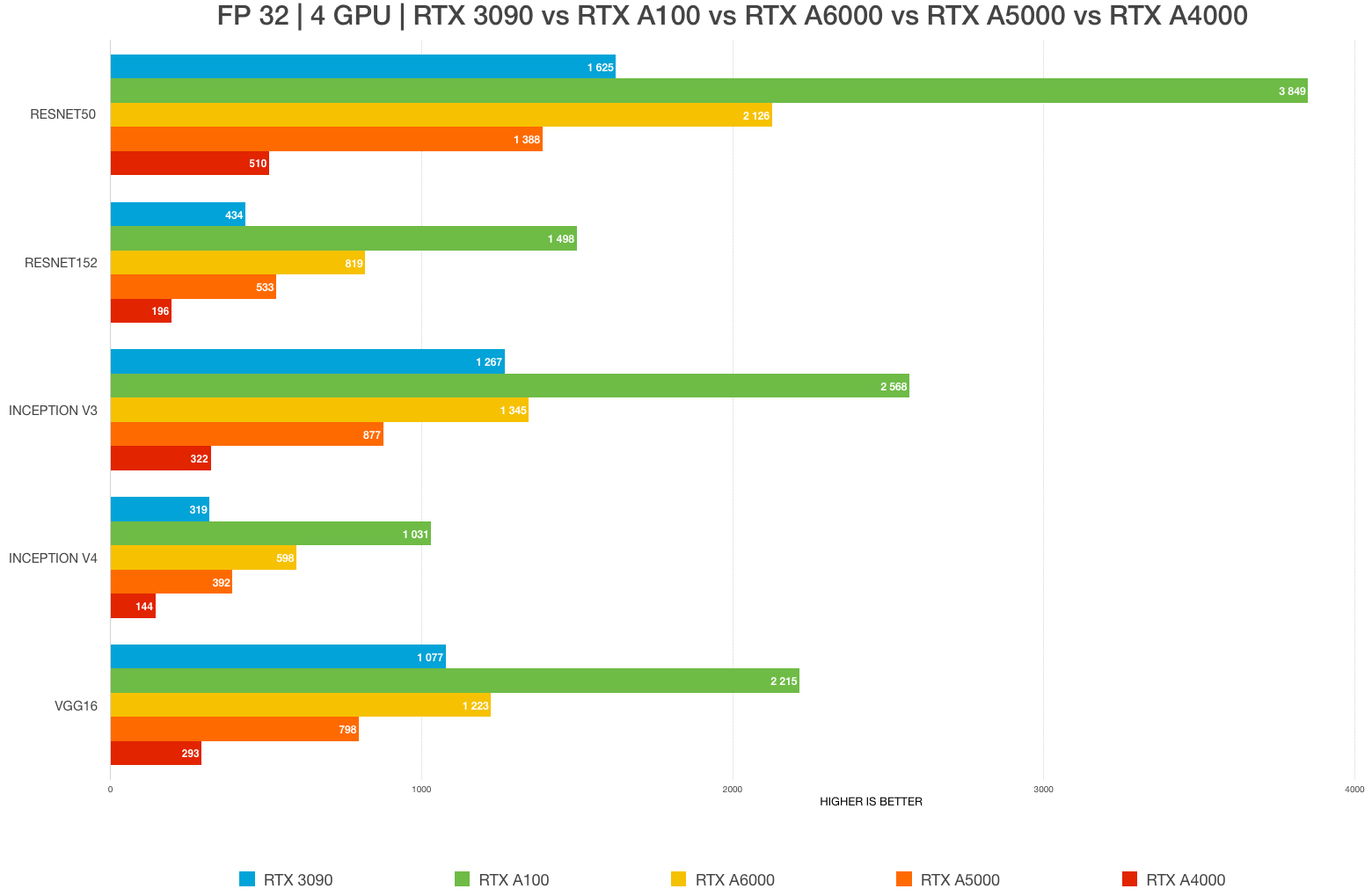

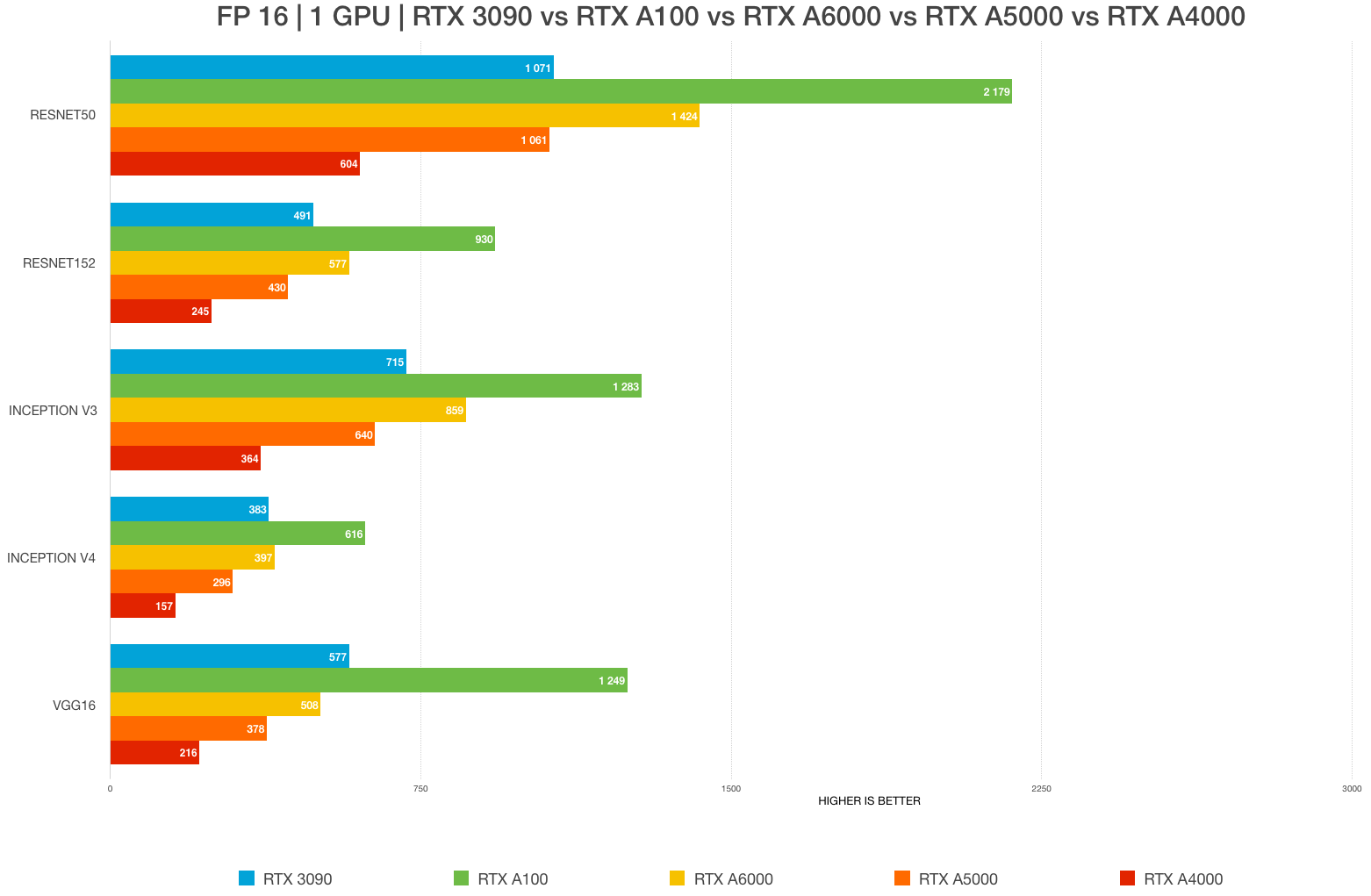

Best GPU for AI/ML, deep learning, data science in 2023: RTX 4090 vs. 3090 vs. RTX 3080 Ti vs A6000 vs A5000 vs A100 benchmarks (FP32, FP16) – Updated – | BIZON

![Best GPUs for Deep Learning (Machine Learning) 2021 [GUIDE]](https://i1.wp.com/saitechincorporated.com/wp-content/uploads/2021/06/maxresdefault.jpg?resize=580%2C326&ssl=1)